Agents in Artificial Intelligence (AI)

Artificial Intelligence (AI) is the study and development of rational agents capable of making decisions and taking actions to achieve optimal outcomes. A person, a machine, a software program, or even a mix of these could be considered a rational agent. These agents operate based on their perception of the environment and their ability to act within it.

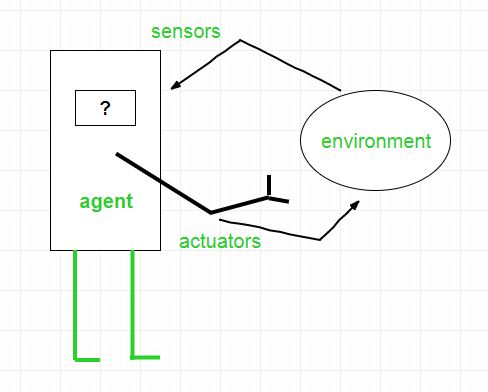

An AI system has two primary parts: an agent and its environment. The agent perceives the environment and acts on it. The environment, in turn, may include other agents, making interaction a critical aspect of AI.

What Defines an Agent in AI?

An agent is anything that:

- Perceives its environment through sensors.

- Acts on that environment through actuators.

Notably, every agent can perceive its own actions, though it may not always perceive their effects.

Agent Composition:

The basic formula to understand an AI agent is:

Agent = Architecture + Agent Program

- Architecture refers to the underlying hardware or system that supports the agent, such as a robot, computer, or camera.

- Agent Program defines the logic and strategies used to process inputs and determine actions.
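The split between architecture and agent program can be sketched in a few lines of Python. This is only an illustration: the `Architecture` class, the `thermostat_program` function, and the percept and action strings are all hypothetical names chosen for the example, not part of any standard library.

```python
from typing import Callable, List

Percept = str
Action = str
# An agent program maps a percept to an action.
AgentProgram = Callable[[Percept], Action]


class Architecture:
    """Stand-in for the hardware: passes percepts to the program and records actions."""

    def __init__(self, program: AgentProgram) -> None:
        self.program = program
        self.executed: List[Action] = []

    def step(self, percept: Percept) -> Action:
        action = self.program(percept)
        # A real architecture would drive actuators here; we only log the action.
        self.executed.append(action)
        return action


def thermostat_program(percept: Percept) -> Action:
    """Hypothetical agent program: turn the heat on only when it is cold."""
    return "heat_on" if percept == "cold" else "heat_off"


# Agent = Architecture + Agent Program
agent = Architecture(thermostat_program)
actions = [agent.step(p) for p in ["cold", "warm", "cold"]]
print(actions)  # ['heat_on', 'heat_off', 'heat_on']
```

Swapping in a different program changes the agent's behavior without touching the architecture, which is the point of the formula.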

Types of Agents in AI

Agents can be divided into groups according to their intelligence, complexity, and capacity for making decisions:

1. Simple Reflex Agents

These agents base their actions solely on the current percept, ignoring the percept history. They operate using condition-action rules of the form "if condition, then action".

While simple reflex agents are efficient in fully observable environments, they often fail in dynamic or partially observable ones, leading to limitations such as:

- Lack of knowledge of the environment’s unobservable states.

- Inability to adapt to changes without extensive rule updates.
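As a minimal sketch of condition-action rules, consider a two-square vacuum world, a common textbook illustration. The square names `A` and `B`, the action names, and the `RULES` table below are assumptions made for this example.

```python
# Each rule maps the current percept (location, status) directly to an action.
RULES = {
    ("A", "dirty"): "suck",
    ("B", "dirty"): "suck",
    ("A", "clean"): "move_right",
    ("B", "clean"): "move_left",
}


def simple_reflex_agent(percept):
    """Pick an action from the current percept only; no memory is kept."""
    return RULES[percept]


print(simple_reflex_agent(("A", "dirty")))  # suck
print(simple_reflex_agent(("A", "clean")))  # move_right
```

Because the agent keeps no memory, it keeps shuttling between the squares even after both are clean, which illustrates the limitations listed above.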

2. Model-Based Reflex Agents

Model-based agents overcome the limitations of simple reflex agents by maintaining an internal state that tracks information about unobservable aspects of the environment.

To update this state, the agent needs two kinds of knowledge:

- How the world evolves independently of the agent.

- How the agent’s own actions affect the world.

This model-based approach allows them to handle dynamic and partially observable environments more effectively.
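The idea of an internal state can be sketched with a two-square vacuum world in which the agent sees only its current square. Everything here is illustrative: the class name, the square names `A` and `B`, and the simplifying assumption that squares never get dirty again on their own.

```python
class ModelBasedVacuumAgent:
    """Keeps a belief about both squares, although it only senses the current one."""

    def __init__(self):
        # Internal state: what the agent believes about each square.
        self.believed_status = {"A": "unknown", "B": "unknown"}

    def act(self, percept):
        location, status = percept
        # Update the internal state from the new percept.
        self.believed_status[location] = status
        if status == "dirty":
            # Model of the agent's own action: sucking leaves the square clean.
            self.believed_status[location] = "clean"
            return "suck"
        # Model of the world (assumed): clean squares stay clean.
        if all(s == "clean" for s in self.believed_status.values()):
            return "no_op"
        return "move_right" if location == "A" else "move_left"


agent = ModelBasedVacuumAgent()
percepts = [("A", "dirty"), ("A", "clean"), ("B", "clean"), ("B", "clean")]
actions = [agent.act(p) for p in percepts]
print(actions)  # ['suck', 'move_right', 'no_op', 'no_op']
```

Unlike a pure reflex agent, this one stops moving once it believes both squares are clean, because it remembers a square it can no longer see.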

3. Goal-Based Agents

Goal-based agents choose actions that bring them closer to an explicitly stated goal. They use search and planning to find a sequence of actions that reaches it.

Advantages include:

- Flexibility in modifying goals and adapting to new conditions.

- Clear reasoning behind their decisions.
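One simple way to plan toward a goal is breadth-first search over a graph of states. The sketch below is an assumption-laden example, not a prescribed method: the `GRAPH` of states and the `plan` function are hypothetical, and other search or planning techniques would serve equally well.

```python
from collections import deque

# Hypothetical state graph: each state lists the states reachable in one action.
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}


def plan(start, goal, graph):
    """Breadth-first search: returns a shortest path to the goal, or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None


print(plan("A", "F", GRAPH))  # ['A', 'B', 'D', 'F']
print(plan("A", "E", GRAPH))  # ['A', 'C', 'E']
```

Changing the goal changes the plan without rewriting any rules, which is the flexibility noted above, and the returned path doubles as an explanation of the agent's choice.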

4. Utility-Based Agents

Utility-based agents go beyond simply achieving goals. They compare the available options and select actions using a utility function, which maps each state to a number expressing how desirable that state is (its degree of “happiness” or satisfaction).

Key features:

- Consider trade-offs between multiple objectives (e.g., speed, safety, cost).

- Handle uncertainty by maximizing expected utility.
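Maximizing expected utility can be illustrated with a small route-choice example. The routes, probabilities, and the weighting inside `utility` are invented numbers used only to show the calculation.

```python
# For each action: a list of (probability, resulting state) pairs.
# All values below are made up for illustration.
OUTCOMES = {
    "highway": [
        (0.8, {"minutes": 20, "cost": 5}),  # traffic flows freely
        (0.2, {"minutes": 60, "cost": 5}),  # traffic jam
    ],
    "back_roads": [
        (1.0, {"minutes": 36, "cost": 2}),
    ],
}


def utility(state):
    """Trade-off between objectives: less time and lower cost are both better."""
    return -state["minutes"] - 2 * state["cost"]


def expected_utility(action):
    """Probability-weighted average utility over the action's possible outcomes."""
    return sum(p * utility(s) for p, s in OUTCOMES[action])


best = max(OUTCOMES, key=expected_utility)
print(best)  # highway
```

With these numbers the highway has an expected utility of about -38 against -40 for the back roads, so the agent accepts the risk of a jam. Different weights in `utility` could reverse the choice.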

5. Learning Agents

Learning agents can improve their performance over time by learning from experience. They consist of four main components:

- Learning Element: Analyzes data and improves strategies.

- Critic: Provides feedback on performance.

- Performance Element: Executes actions based on learned knowledge.

- Problem Generator: Suggests exploratory actions for better learning opportunities.
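The four components can be mapped onto a very small learning loop. The sketch below is one possible reading, not a canonical design: the `LearningAgent` class, the `REWARDS` table, and the fixed exploration schedule are all assumptions for the example.

```python
# Hypothetical environment: each action always yields the same reward.
REWARDS = {"left": 1.0, "right": 3.0}


class LearningAgent:
    def __init__(self, actions, explore_every=4):
        self.estimates = {a: 0.0 for a in actions}  # learned value of each action
        self.counts = {a: 0 for a in actions}
        self.explore_every = explore_every
        self.steps = 0

    def performance_element(self):
        """Acts on what has been learned: picks the best-looking action."""
        return max(self.estimates, key=self.estimates.get)

    def problem_generator(self):
        """Suggests an exploratory action: the one tried least often."""
        return min(self.counts, key=self.counts.get)

    def critic(self, reward):
        """Turns the raw outcome into feedback (here, the reward itself)."""
        return reward

    def learning_element(self, action, feedback):
        """Updates the running-average estimate for the action taken."""
        self.counts[action] += 1
        self.estimates[action] += (feedback - self.estimates[action]) / self.counts[action]

    def choose_action(self):
        self.steps += 1
        if self.steps % self.explore_every == 0:
            return self.problem_generator()
        return self.performance_element()


agent = LearningAgent(["left", "right"])
for _ in range(12):
    action = agent.choose_action()
    agent.learning_element(action, agent.critic(REWARDS[action]))

print(agent.performance_element())  # right
```

The agent starts out preferring `left` only because it has no information. The exploratory step suggested by the problem generator is what lets it discover that `right` pays more.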

Properties of AI Environments

An agent’s success is influenced by the environment it operates in. These environments can be described by several properties:

- Fully Observable vs. Partially Observable:

  - Fully observable environments give the agent complete information about the current state.

  - Partially observable environments require inference and decision-making under uncertainty.

- Deterministic vs. Non-Deterministic:

  - In deterministic environments, the next state is fully determined by the current state and the agent’s action.

  - Non-deterministic environments introduce randomness and uncertainty.

- Static vs. Dynamic:

  - Static environments do not change while the agent is deciding what to do.

  - Dynamic environments evolve over time, requiring agents to adapt continuously.

- Discrete vs. Continuous:

  - Discrete environments have a limited number of distinct states and actions (e.g., a chessboard).

  - Continuous environments, like driving, involve smoothly varying quantities such as position and speed.

- Single-Agent vs. Multi-Agent:

  - Single-agent environments involve only one decision-maker.

  - Multi-agent environments involve interaction among agents, whether cooperative or competitive.

- Accessible vs. Inaccessible (an older name for the fully observable vs. partially observable distinction):

  - Accessible environments provide full access to their states.

  - Inaccessible environments limit the agent’s observational capabilities.

- Episodic vs. Sequential:

  - Episodic environments consist of independent episodes without long-term dependencies.

  - In sequential environments, the current decision can affect future decisions, so agents must take earlier actions into account when planning.
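These properties can be recorded as a simple profile for each task. The sketch below is illustrative only: the `EnvironmentProfile` class and its `needs_internal_state` helper are hypothetical, and the chess profile assumes a game played without a clock.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    fully_observable: bool
    deterministic: bool
    static: bool
    discrete: bool
    single_agent: bool
    episodic: bool

    def needs_internal_state(self) -> bool:
        """Partially observable environments call for an agent that keeps memory."""
        return not self.fully_observable


# Chess (assumed to be played without a clock).
chess = EnvironmentProfile(
    fully_observable=True, deterministic=True, static=True,
    discrete=True, single_agent=False, episodic=False,
)

# Driving in traffic.
driving = EnvironmentProfile(
    fully_observable=False, deterministic=False, static=False,
    discrete=False, single_agent=False, episodic=False,
)

print(chess.needs_internal_state())    # False
print(driving.needs_internal_state())  # True
```

A profile like this makes it easier to argue which agent type fits a task. Driving, for example, is partially observable and dynamic, so a simple reflex agent would be a poor match.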

Measuring Intelligence: The Turing Test

The Turing Test evaluates a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. In this test:

- An examiner interacts with both a human and a machine, unaware of which is which.

- If the examiner cannot reliably tell them apart based on their responses, the machine is said to pass the test and is considered intelligent.
